Mirror of https://github.com/superseriousbusiness/gotosocial.git (synced 2025-12-14 00:37:27 -06:00)

[chore] bump dependencies (#4339)

- github.com/KimMachineGun/automemlimit v0.7.4
- github.com/miekg/dns v1.1.67
- github.com/minio/minio-go/v7 v7.0.95
- github.com/spf13/pflag v1.0.7
- github.com/tdewolff/minify/v2 v2.23.9
- github.com/uptrace/bun v1.2.15
- github.com/uptrace/bun/dialect/pgdialect v1.2.15
- github.com/uptrace/bun/dialect/sqlitedialect v1.2.15
- github.com/uptrace/bun/extra/bunotel v1.2.15
- golang.org/x/image v0.29.0
- golang.org/x/net v0.42.0

Reviewed-on: https://codeberg.org/superseriousbusiness/gotosocial/pulls/4339
Co-authored-by: kim <grufwub@gmail.com>
Co-committed-by: kim <grufwub@gmail.com>


parent eb60081985, commit c00cad2ceb

76 changed files with 5544 additions and 886 deletions

go.mod (34 lines changed)

```diff
@@ -34,7 +34,7 @@ require (
 	codeberg.org/gruf/go-storage v0.3.1
 	codeberg.org/gruf/go-structr v0.9.7
 	github.com/DmitriyVTitov/size v1.5.0
-	github.com/KimMachineGun/automemlimit v0.7.3
+	github.com/KimMachineGun/automemlimit v0.7.4
 	github.com/SherClockHolmes/webpush-go v1.4.0
 	github.com/buckket/go-blurhash v1.1.0
 	github.com/coreos/go-oidc/v3 v3.14.1
@@ -51,8 +51,8 @@ require (
 	github.com/jackc/pgx/v5 v5.7.5
 	github.com/k3a/html2text v1.2.1
 	github.com/microcosm-cc/bluemonday v1.0.27
-	github.com/miekg/dns v1.1.66
-	github.com/minio/minio-go/v7 v7.0.94
+	github.com/miekg/dns v1.1.67
+	github.com/minio/minio-go/v7 v7.0.95
 	github.com/mitchellh/mapstructure v1.5.0
 	github.com/ncruces/go-sqlite3 v0.27.1
 	github.com/oklog/ulid v1.3.1
@@ -60,19 +60,19 @@ require (
 	github.com/rivo/uniseg v0.4.7
 	github.com/spf13/cast v1.9.2
 	github.com/spf13/cobra v1.9.1
-	github.com/spf13/pflag v1.0.6
+	github.com/spf13/pflag v1.0.7
 	github.com/spf13/viper v1.20.1
 	github.com/stretchr/testify v1.10.0
-	github.com/tdewolff/minify/v2 v2.23.8
+	github.com/tdewolff/minify/v2 v2.23.9
 	github.com/technologize/otel-go-contrib v1.1.1
 	github.com/temoto/robotstxt v1.1.2
 	github.com/tetratelabs/wazero v1.9.0
 	github.com/tomnomnom/linkheader v0.0.0-20180905144013-02ca5825eb80
 	github.com/ulule/limiter/v3 v3.11.2
-	github.com/uptrace/bun v1.2.14
-	github.com/uptrace/bun/dialect/pgdialect v1.2.14
-	github.com/uptrace/bun/dialect/sqlitedialect v1.2.14
-	github.com/uptrace/bun/extra/bunotel v1.2.14
+	github.com/uptrace/bun v1.2.15
+	github.com/uptrace/bun/dialect/pgdialect v1.2.15
+	github.com/uptrace/bun/dialect/sqlitedialect v1.2.15
+	github.com/uptrace/bun/extra/bunotel v1.2.15
 	github.com/wagslane/go-password-validator v0.3.0
 	github.com/yuin/goldmark v1.7.12
 	go.opentelemetry.io/contrib/exporters/autoexport v0.62.0
@@ -84,8 +84,8 @@ require (
 	go.opentelemetry.io/otel/trace v1.37.0
 	go.uber.org/automaxprocs v1.6.0
 	golang.org/x/crypto v0.40.0
-	golang.org/x/image v0.28.0
-	golang.org/x/net v0.41.0
+	golang.org/x/image v0.29.0
+	golang.org/x/net v0.42.0
 	golang.org/x/oauth2 v0.30.0
 	golang.org/x/sys v0.34.0
 	golang.org/x/text v0.27.0
@@ -153,6 +153,7 @@ require (
 	github.com/gorilla/handlers v1.5.2 // indirect
 	github.com/gorilla/securecookie v1.1.2 // indirect
 	github.com/gorilla/sessions v1.4.0 // indirect
+	github.com/grafana/regexp v0.0.0-20240518133315-a468a5bfb3bc // indirect
 	github.com/grpc-ecosystem/grpc-gateway/v2 v2.27.1 // indirect
 	github.com/huandu/xstrings v1.4.0 // indirect
 	github.com/imdario/mergo v0.3.16 // indirect
@@ -165,13 +166,13 @@ require (
 	github.com/josharian/intern v1.0.0 // indirect
 	github.com/json-iterator/go v1.1.12 // indirect
 	github.com/klauspost/compress v1.18.0 // indirect
-	github.com/klauspost/cpuid/v2 v2.2.10 // indirect
+	github.com/klauspost/cpuid/v2 v2.2.11 // indirect
 	github.com/kr/pretty v0.3.1 // indirect
 	github.com/kr/text v0.2.0 // indirect
 	github.com/leodido/go-urn v1.4.0 // indirect
 	github.com/mailru/easyjson v0.7.7 // indirect
 	github.com/mattn/go-isatty v0.0.20 // indirect
-	github.com/minio/crc64nvme v1.0.1 // indirect
+	github.com/minio/crc64nvme v1.0.2 // indirect
 	github.com/minio/md5-simd v1.1.2 // indirect
 	github.com/mitchellh/copystructure v1.2.0 // indirect
 	github.com/mitchellh/reflectwalk v1.0.2 // indirect
@@ -182,13 +183,14 @@ require (
 	github.com/ncruces/julianday v1.0.0 // indirect
 	github.com/pbnjay/memory v0.0.0-20210728143218-7b4eea64cf58 // indirect
 	github.com/pelletier/go-toml/v2 v2.2.4 // indirect
-	github.com/philhofer/fwd v1.1.3-0.20240916144458-20a13a1f6b7c // indirect
+	github.com/philhofer/fwd v1.2.0 // indirect
 	github.com/pkg/errors v0.9.1 // indirect
 	github.com/pmezard/go-difflib v1.0.1-0.20181226105442-5d4384ee4fb2 // indirect
 	github.com/prometheus/client_golang v1.22.0 // indirect
 	github.com/prometheus/client_model v0.6.2 // indirect
 	github.com/prometheus/common v0.65.0 // indirect
-	github.com/prometheus/procfs v0.16.1 // indirect
+	github.com/prometheus/otlptranslator v0.0.0-20250717125610-8549f4ab4f8f // indirect
+	github.com/prometheus/procfs v0.17.0 // indirect
 	github.com/puzpuzpuz/xsync/v3 v3.5.1 // indirect
 	github.com/quasoft/memstore v0.0.0-20191010062613-2bce066d2b0b // indirect
 	github.com/remyoudompheng/bigfft v0.0.0-20230129092748-24d4a6f8daec // indirect
@@ -218,7 +220,7 @@ require (
 	go.opentelemetry.io/otel/exporters/otlp/otlptrace v1.37.0 // indirect
 	go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracegrpc v1.37.0 // indirect
 	go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracehttp v1.37.0 // indirect
-	go.opentelemetry.io/otel/exporters/prometheus v0.59.0 // indirect
+	go.opentelemetry.io/otel/exporters/prometheus v0.59.1 // indirect
 	go.opentelemetry.io/otel/exporters/stdout/stdoutlog v0.13.0 // indirect
 	go.opentelemetry.io/otel/exporters/stdout/stdoutmetric v1.37.0 // indirect
 	go.opentelemetry.io/otel/exporters/stdout/stdouttrace v1.37.0 // indirect
```

go.sum (generated, 67 lines changed)

```diff
@@ -58,8 +58,8 @@ codeberg.org/superseriousbusiness/go-swagger v0.32.3-gts-go1.23-fix h1:k76/Th+br
 codeberg.org/superseriousbusiness/go-swagger v0.32.3-gts-go1.23-fix/go.mod h1:lAwO1nKff3qNRJYVQeTCl1am5pcNiiA2VyDf8TqzS24=
 github.com/DmitriyVTitov/size v1.5.0 h1:/PzqxYrOyOUX1BXj6J9OuVRVGe+66VL4D9FlUaW515g=
 github.com/DmitriyVTitov/size v1.5.0/go.mod h1:le6rNI4CoLQV1b9gzp1+3d7hMAD/uu2QcJ+aYbNgiU0=
-github.com/KimMachineGun/automemlimit v0.7.3 h1:oPgMp0bsWez+4fvgSa11Rd9nUDrd8RLtDjBoT3ro+/A=
-github.com/KimMachineGun/automemlimit v0.7.3/go.mod h1:QZxpHaGOQoYvFhv/r4u3U0JTC2ZcOwbSr11UZF46UBM=
+github.com/KimMachineGun/automemlimit v0.7.4 h1:UY7QYOIfrr3wjjOAqahFmC3IaQCLWvur9nmfIn6LnWk=
+github.com/KimMachineGun/automemlimit v0.7.4/go.mod h1:QZxpHaGOQoYvFhv/r4u3U0JTC2ZcOwbSr11UZF46UBM=
 github.com/Masterminds/goutils v1.1.1 h1:5nUrii3FMTL5diU80unEVvNevw1nH4+ZV4DSLVJLSYI=
 github.com/Masterminds/goutils v1.1.1/go.mod h1:8cTjp+g8YejhMuvIA5y2vz3BpJxksy863GQaJW2MFNU=
 github.com/Masterminds/semver/v3 v3.2.0/go.mod h1:qvl/7zhW3nngYb5+80sSMF+FG2BjYrf8m9wsX0PNOMQ=
@@ -249,6 +249,8 @@ github.com/gorilla/sessions v1.4.0 h1:kpIYOp/oi6MG/p5PgxApU8srsSw9tuFbt46Lt7auzq
 github.com/gorilla/sessions v1.4.0/go.mod h1:FLWm50oby91+hl7p/wRxDth9bWSuk0qVL2emc7lT5ik=
 github.com/gorilla/websocket v1.5.3 h1:saDtZ6Pbx/0u+bgYQ3q96pZgCzfhKXGPqt7kZ72aNNg=
 github.com/gorilla/websocket v1.5.3/go.mod h1:YR8l580nyteQvAITg2hZ9XVh4b55+EU/adAjf1fMHhE=
+github.com/grafana/regexp v0.0.0-20240518133315-a468a5bfb3bc h1:GN2Lv3MGO7AS6PrRoT6yV5+wkrOpcszoIsO4+4ds248=
+github.com/grafana/regexp v0.0.0-20240518133315-a468a5bfb3bc/go.mod h1:+JKpmjMGhpgPL+rXZ5nsZieVzvarn86asRlBg4uNGnk=
 github.com/grpc-ecosystem/grpc-gateway/v2 v2.27.1 h1:X5VWvz21y3gzm9Nw/kaUeku/1+uBhcekkmy4IkffJww=
 github.com/grpc-ecosystem/grpc-gateway/v2 v2.27.1/go.mod h1:Zanoh4+gvIgluNqcfMVTJueD4wSS5hT7zTt4Mrutd90=
 github.com/huandu/xstrings v1.3.3/go.mod h1:y5/lhBue+AyNmUVz9RLU9xbLR0o4KIIExikq4ovT0aE=
@@ -286,8 +288,8 @@ github.com/klauspost/compress v1.18.0 h1:c/Cqfb0r+Yi+JtIEq73FWXVkRonBlf0CRNYc8Zt
 github.com/klauspost/compress v1.18.0/go.mod h1:2Pp+KzxcywXVXMr50+X0Q/Lsb43OQHYWRCY2AiWywWQ=
 github.com/klauspost/cpuid/v2 v2.0.1/go.mod h1:FInQzS24/EEf25PyTYn52gqo7WaD8xa0213Md/qVLRg=
 github.com/klauspost/cpuid/v2 v2.0.9/go.mod h1:FInQzS24/EEf25PyTYn52gqo7WaD8xa0213Md/qVLRg=
-github.com/klauspost/cpuid/v2 v2.2.10 h1:tBs3QSyvjDyFTq3uoc/9xFpCuOsJQFNPiAhYdw2skhE=
-github.com/klauspost/cpuid/v2 v2.2.10/go.mod h1:hqwkgyIinND0mEev00jJYCxPNVRVXFQeu1XKlok6oO0=
+github.com/klauspost/cpuid/v2 v2.2.11 h1:0OwqZRYI2rFrjS4kvkDnqJkKHdHaRnCm68/DY4OxRzU=
+github.com/klauspost/cpuid/v2 v2.2.11/go.mod h1:hqwkgyIinND0mEev00jJYCxPNVRVXFQeu1XKlok6oO0=
 github.com/knz/go-libedit v1.10.1/go.mod h1:MZTVkCWyz0oBc7JOWP3wNAzd002ZbM/5hgShxwh4x8M=
 github.com/kr/pretty v0.3.1 h1:flRD4NNwYAUpkphVc1HcthR4KEIFJ65n8Mw5qdRn3LE=
 github.com/kr/pretty v0.3.1/go.mod h1:hoEshYVHaxMs3cyo3Yncou5ZscifuDolrwPKZanG3xk=
@@ -305,14 +307,14 @@ github.com/mattn/go-isatty v0.0.20 h1:xfD0iDuEKnDkl03q4limB+vH+GxLEtL/jb4xVJSWWE
 github.com/mattn/go-isatty v0.0.20/go.mod h1:W+V8PltTTMOvKvAeJH7IuucS94S2C6jfK/D7dTCTo3Y=
 github.com/microcosm-cc/bluemonday v1.0.27 h1:MpEUotklkwCSLeH+Qdx1VJgNqLlpY2KXwXFM08ygZfk=
 github.com/microcosm-cc/bluemonday v1.0.27/go.mod h1:jFi9vgW+H7c3V0lb6nR74Ib/DIB5OBs92Dimizgw2cA=
-github.com/miekg/dns v1.1.66 h1:FeZXOS3VCVsKnEAd+wBkjMC3D2K+ww66Cq3VnCINuJE=
-github.com/miekg/dns v1.1.66/go.mod h1:jGFzBsSNbJw6z1HYut1RKBKHA9PBdxeHrZG8J+gC2WE=
-github.com/minio/crc64nvme v1.0.1 h1:DHQPrYPdqK7jQG/Ls5CTBZWeex/2FMS3G5XGkycuFrY=
-github.com/minio/crc64nvme v1.0.1/go.mod h1:eVfm2fAzLlxMdUGc0EEBGSMmPwmXD5XiNRpnu9J3bvg=
+github.com/miekg/dns v1.1.67 h1:kg0EHj0G4bfT5/oOys6HhZw4vmMlnoZ+gDu8tJ/AlI0=
+github.com/miekg/dns v1.1.67/go.mod h1:fujopn7TB3Pu3JM69XaawiU0wqjpL9/8xGop5UrTPps=
+github.com/minio/crc64nvme v1.0.2 h1:6uO1UxGAD+kwqWWp7mBFsi5gAse66C4NXO8cmcVculg=
+github.com/minio/crc64nvme v1.0.2/go.mod h1:eVfm2fAzLlxMdUGc0EEBGSMmPwmXD5XiNRpnu9J3bvg=
 github.com/minio/md5-simd v1.1.2 h1:Gdi1DZK69+ZVMoNHRXJyNcxrMA4dSxoYHZSQbirFg34=
 github.com/minio/md5-simd v1.1.2/go.mod h1:MzdKDxYpY2BT9XQFocsiZf/NKVtR7nkE4RoEpN+20RM=
-github.com/minio/minio-go/v7 v7.0.94 h1:1ZoksIKPyaSt64AVOyaQvhDOgVC3MfZsWM6mZXRUGtM=
-github.com/minio/minio-go/v7 v7.0.94/go.mod h1:71t2CqDt3ThzESgZUlU1rBN54mksGGlkLcFgguDnnAc=
+github.com/minio/minio-go/v7 v7.0.95 h1:ywOUPg+PebTMTzn9VDsoFJy32ZuARN9zhB+K3IYEvYU=
+github.com/minio/minio-go/v7 v7.0.95/go.mod h1:wOOX3uxS334vImCNRVyIDdXX9OsXDm89ToynKgqUKlo=
 github.com/mitchellh/copystructure v1.0.0/go.mod h1:SNtv71yrdKgLRyLFxmLdkAbkKEFWgYaq1OVrnRcwhnw=
 github.com/mitchellh/copystructure v1.2.0 h1:vpKXTN4ewci03Vljg/q9QvCGUDttBOGBIa15WveJJGw=
 github.com/mitchellh/copystructure v1.2.0/go.mod h1:qLl+cE2AmVv+CoeAwDPye/v+N2HKCj9FbZEVFJRxO9s=
@@ -344,8 +346,8 @@ github.com/pbnjay/memory v0.0.0-20210728143218-7b4eea64cf58 h1:onHthvaw9LFnH4t2D
 github.com/pbnjay/memory v0.0.0-20210728143218-7b4eea64cf58/go.mod h1:DXv8WO4yhMYhSNPKjeNKa5WY9YCIEBRbNzFFPJbWO6Y=
 github.com/pelletier/go-toml/v2 v2.2.4 h1:mye9XuhQ6gvn5h28+VilKrrPoQVanw5PMw/TB0t5Ec4=
 github.com/pelletier/go-toml/v2 v2.2.4/go.mod h1:2gIqNv+qfxSVS7cM2xJQKtLSTLUE9V8t9Stt+h56mCY=
-github.com/philhofer/fwd v1.1.3-0.20240916144458-20a13a1f6b7c h1:dAMKvw0MlJT1GshSTtih8C2gDs04w8dReiOGXrGLNoY=
-github.com/philhofer/fwd v1.1.3-0.20240916144458-20a13a1f6b7c/go.mod h1:RqIHx9QI14HlwKwm98g9Re5prTQ6LdeRQn+gXJFxsJM=
+github.com/philhofer/fwd v1.2.0 h1:e6DnBTl7vGY+Gz322/ASL4Gyp1FspeMvx1RNDoToZuM=
+github.com/philhofer/fwd v1.2.0/go.mod h1:RqIHx9QI14HlwKwm98g9Re5prTQ6LdeRQn+gXJFxsJM=
 github.com/pkg/diff v0.0.0-20210226163009-20ebb0f2a09e/go.mod h1:pJLUxLENpZxwdsKMEsNbx1VGcRFpLqf3715MtcvvzbA=
 github.com/pkg/errors v0.9.1 h1:FEBLx1zS214owpjy7qsBeixbURkuhQAwrK5UwLGTwt4=
 github.com/pkg/errors v0.9.1/go.mod h1:bwawxfHBFNV+L2hUp1rHADufV3IMtnDRdf1r5NINEl0=
@@ -362,8 +364,10 @@ github.com/prometheus/client_model v0.6.2 h1:oBsgwpGs7iVziMvrGhE53c/GrLUsZdHnqNw
 github.com/prometheus/client_model v0.6.2/go.mod h1:y3m2F6Gdpfy6Ut/GBsUqTWZqCUvMVzSfMLjcu6wAwpE=
 github.com/prometheus/common v0.65.0 h1:QDwzd+G1twt//Kwj/Ww6E9FQq1iVMmODnILtW1t2VzE=
 github.com/prometheus/common v0.65.0/go.mod h1:0gZns+BLRQ3V6NdaerOhMbwwRbNh9hkGINtQAsP5GS8=
-github.com/prometheus/procfs v0.16.1 h1:hZ15bTNuirocR6u0JZ6BAHHmwS1p8B4P6MRqxtzMyRg=
-github.com/prometheus/procfs v0.16.1/go.mod h1:teAbpZRB1iIAJYREa1LsoWUXykVXA1KlTmWl8x/U+Is=
+github.com/prometheus/otlptranslator v0.0.0-20250717125610-8549f4ab4f8f h1:QQB6SuvGZjK8kdc2YaLJpYhV8fxauOsjE6jgcL6YJ8Q=
+github.com/prometheus/otlptranslator v0.0.0-20250717125610-8549f4ab4f8f/go.mod h1:P8AwMgdD7XEr6QRUJ2QWLpiAZTgTE2UYgjlu3svompI=
+github.com/prometheus/procfs v0.17.0 h1:FuLQ+05u4ZI+SS/w9+BWEM2TXiHKsUQ9TADiRH7DuK0=
+github.com/prometheus/procfs v0.17.0/go.mod h1:oPQLaDAMRbA+u8H5Pbfq+dl3VDAvHxMUOVhe0wYB2zw=
 github.com/puzpuzpuz/xsync/v3 v3.5.1 h1:GJYJZwO6IdxN/IKbneznS6yPkVC+c3zyY/j19c++5Fg=
 github.com/puzpuzpuz/xsync/v3 v3.5.1/go.mod h1:VjzYrABPabuM4KyBh1Ftq6u8nhwY5tBPKP9jpmh0nnA=
 github.com/quasoft/memstore v0.0.0-20191010062613-2bce066d2b0b h1:aUNXCGgukb4gtY99imuIeoh8Vr0GSwAlYxPAhqZrpFc=
@@ -402,8 +406,9 @@ github.com/spf13/cast v1.9.2 h1:SsGfm7M8QOFtEzumm7UZrZdLLquNdzFYfIbEXntcFbE=
 github.com/spf13/cast v1.9.2/go.mod h1:jNfB8QC9IA6ZuY2ZjDp0KtFO2LZZlg4S/7bzP6qqeHo=
 github.com/spf13/cobra v1.9.1 h1:CXSaggrXdbHK9CF+8ywj8Amf7PBRmPCOJugH954Nnlo=
 github.com/spf13/cobra v1.9.1/go.mod h1:nDyEzZ8ogv936Cinf6g1RU9MRY64Ir93oCnqb9wxYW0=
-github.com/spf13/pflag v1.0.6 h1:jFzHGLGAlb3ruxLB8MhbI6A8+AQX/2eW4qeyNZXNp2o=
 github.com/spf13/pflag v1.0.6/go.mod h1:McXfInJRrz4CZXVZOBLb0bTZqETkiAhM9Iw0y3An2Bg=
+github.com/spf13/pflag v1.0.7 h1:vN6T9TfwStFPFM5XzjsvmzZkLuaLX+HS+0SeFLRgU6M=
+github.com/spf13/pflag v1.0.7/go.mod h1:McXfInJRrz4CZXVZOBLb0bTZqETkiAhM9Iw0y3An2Bg=
 github.com/spf13/viper v1.20.1 h1:ZMi+z/lvLyPSCoNtFCpqjy0S4kPbirhpTMwl8BkW9X4=
 github.com/spf13/viper v1.20.1/go.mod h1:P9Mdzt1zoHIG8m2eZQinpiBjo6kCmZSKBClNNqjJvu4=
 github.com/stretchr/objx v0.1.0/go.mod h1:HFkY916IF+rwdDfMAkV7OtwuqBVzrE8GR6GFx+wExME=
@@ -420,8 +425,8 @@ github.com/stretchr/testify v1.10.0 h1:Xv5erBjTwe/5IxqUQTdXv5kgmIvbHo3QQyRwhJsOf
 github.com/stretchr/testify v1.10.0/go.mod h1:r2ic/lqez/lEtzL7wO/rwa5dbSLXVDPFyf8C91i36aY=
 github.com/subosito/gotenv v1.6.0 h1:9NlTDc1FTs4qu0DDq7AEtTPNw6SVm7uBMsUCUjABIf8=
 github.com/subosito/gotenv v1.6.0/go.mod h1:Dk4QP5c2W3ibzajGcXpNraDfq2IrhjMIvMSWPKKo0FU=
-github.com/tdewolff/minify/v2 v2.23.8 h1:tvjHzRer46kwOfpdCBCWsDblCw3QtnLJRd61pTVkyZ8=
-github.com/tdewolff/minify/v2 v2.23.8/go.mod h1:VW3ISUd3gDOZuQ/jwZr4sCzsuX+Qvsx87FDMjk6Rvno=
+github.com/tdewolff/minify/v2 v2.23.9 h1:s8hX6wQzOqmanyLxmlynInRPVgZ/xASy6sUHfGsW6kU=
+github.com/tdewolff/minify/v2 v2.23.9/go.mod h1:VW3ISUd3gDOZuQ/jwZr4sCzsuX+Qvsx87FDMjk6Rvno=
 github.com/tdewolff/parse/v2 v2.8.1 h1:J5GSHru6o3jF1uLlEKVXkDxxcVx6yzOlIVIotK4w2po=
 github.com/tdewolff/parse/v2 v2.8.1/go.mod h1:Hwlni2tiVNKyzR1o6nUs4FOF07URA+JLBLd6dlIXYqo=
 github.com/tdewolff/test v1.0.11 h1:FdLbwQVHxqG16SlkGveC0JVyrJN62COWTRyUFzfbtBE=
@@ -462,14 +467,14 @@ github.com/ugorji/go/codec v1.3.0 h1:Qd2W2sQawAfG8XSvzwhBeoGq71zXOC/Q1E9y/wUcsUA
 github.com/ugorji/go/codec v1.3.0/go.mod h1:pRBVtBSKl77K30Bv8R2P+cLSGaTtex6fsA2Wjqmfxj4=
 github.com/ulule/limiter/v3 v3.11.2 h1:P4yOrxoEMJbOTfRJR2OzjL90oflzYPPmWg+dvwN2tHA=
 github.com/ulule/limiter/v3 v3.11.2/go.mod h1:QG5GnFOCV+k7lrL5Y8kgEeeflPH3+Cviqlqa8SVSQxI=
-github.com/uptrace/bun v1.2.14 h1:5yFSfi/yVWEzQ2lAaHz+JfWN9AHmqYtNmlbaUbAp3rU=
-github.com/uptrace/bun v1.2.14/go.mod h1:ZS4nPaEv2Du3OFqAD/irk3WVP6xTB3/9TWqjJbgKYBU=
-github.com/uptrace/bun/dialect/pgdialect v1.2.14 h1:1jmCn7zcYIJDSk1pJO//b11k9NQP1rpWZoyxfoNdpzI=
-github.com/uptrace/bun/dialect/pgdialect v1.2.14/go.mod h1:MrRlsIpWIyOCNosWuG8bVtLb80JyIER5ci0VlTa38dU=
-github.com/uptrace/bun/dialect/sqlitedialect v1.2.14 h1:eLXmNpy2TSsWJNpyIIIeLBa5M+Xxc4n8jX5ASeuvWrg=
-github.com/uptrace/bun/dialect/sqlitedialect v1.2.14/go.mod h1:oORBd9Y7RiAOHAshjuebSFNPZNPLXYcvEWmibuJ8RRk=
-github.com/uptrace/bun/extra/bunotel v1.2.14 h1:LPg/1kEOcwex5w7+Boh6Rdc3xi1PuMVZV06isOPEPaU=
-github.com/uptrace/bun/extra/bunotel v1.2.14/go.mod h1:V509v+akUAx31NbN96WEhkY+rBPJxI0Ul+beKNN1Ato=
+github.com/uptrace/bun v1.2.15 h1:Ut68XRBLDgp9qG9QBMa9ELWaZOmzHNdczHQdrOZbEFE=
+github.com/uptrace/bun v1.2.15/go.mod h1:Eghz7NonZMiTX/Z6oKYytJ0oaMEJ/eq3kEV4vSqG038=
+github.com/uptrace/bun/dialect/pgdialect v1.2.15 h1:er+/3giAIqpfrXJw+KP9B7ujyQIi5XkPnFmgjAVL6bA=
+github.com/uptrace/bun/dialect/pgdialect v1.2.15/go.mod h1:QSiz6Qpy9wlGFsfpf7UMSL6mXAL1jDJhFwuOVacCnOQ=
+github.com/uptrace/bun/dialect/sqlitedialect v1.2.15 h1:7upGMVjFRB1oI78GQw6ruNLblYn5CR+kxqcbbeBBils=
+github.com/uptrace/bun/dialect/sqlitedialect v1.2.15/go.mod h1:c7YIDaPNS2CU2uI1p7umFuFWkuKbDcPDDvp+DLHZnkI=
+github.com/uptrace/bun/extra/bunotel v1.2.15 h1:6KAvKRpH9BC/7n3eMXVgDYLqghHf2H3FJOvxs/yjFJM=
+github.com/uptrace/bun/extra/bunotel v1.2.15/go.mod h1:qnASdcJVuoEE+13N3Gd8XHi5gwCydt2S1TccJnefH2k=
 github.com/uptrace/opentelemetry-go-extra/otelsql v0.3.2 h1:ZjUj9BLYf9PEqBn8W/OapxhPjVRdC6CsXTdULHsyk5c=
 github.com/uptrace/opentelemetry-go-extra/otelsql v0.3.2/go.mod h1:O8bHQfyinKwTXKkiKNGmLQS7vRsqRxIQTFZpYpHK3IQ=
 github.com/valyala/bytebufferpool v1.0.0 h1:GqA5TC/0021Y/b9FG4Oi9Mr3q7XYx6KllzawFIhcdPw=
@@ -527,8 +532,8 @@ go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracegrpc v1.37.0 h1:EtFWS
 go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracegrpc v1.37.0/go.mod h1:QjUEoiGCPkvFZ/MjK6ZZfNOS6mfVEVKYE99dFhuN2LI=
 go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracehttp v1.37.0 h1:bDMKF3RUSxshZ5OjOTi8rsHGaPKsAt76FaqgvIUySLc=
 go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracehttp v1.37.0/go.mod h1:dDT67G/IkA46Mr2l9Uj7HsQVwsjASyV9SjGofsiUZDA=
-go.opentelemetry.io/otel/exporters/prometheus v0.59.0 h1:HHf+wKS6o5++XZhS98wvILrLVgHxjA/AMjqHKes+uzo=
-go.opentelemetry.io/otel/exporters/prometheus v0.59.0/go.mod h1:R8GpRXTZrqvXHDEGVH5bF6+JqAZcK8PjJcZ5nGhEWiE=
+go.opentelemetry.io/otel/exporters/prometheus v0.59.1 h1:HcpSkTkJbggT8bjYP+BjyqPWlD17BH9C5CYNKeDzmcA=
+go.opentelemetry.io/otel/exporters/prometheus v0.59.1/go.mod h1:0FJL+gjuUoM07xzik3KPBaN+nz/CoB15kV6WLMiXZag=
 go.opentelemetry.io/otel/exporters/stdout/stdoutlog v0.13.0 h1:yEX3aC9KDgvYPhuKECHbOlr5GLwH6KTjLJ1sBSkkxkc=
 go.opentelemetry.io/otel/exporters/stdout/stdoutlog v0.13.0/go.mod h1:/GXR0tBmmkxDaCUGahvksvp66mx4yh5+cFXgSlhg0vQ=
 go.opentelemetry.io/otel/exporters/stdout/stdoutmetric v1.37.0 h1:6VjV6Et+1Hd2iLZEPtdV7vie80Yyqf7oikJLjQ/myi0=
@@ -570,8 +575,8 @@ golang.org/x/crypto v0.40.0 h1:r4x+VvoG5Fm+eJcxMaY8CQM7Lb0l1lsmjGBQ6s8BfKM=
 golang.org/x/crypto v0.40.0/go.mod h1:Qr1vMER5WyS2dfPHAlsOj01wgLbsyWtFn/aY+5+ZdxY=
 golang.org/x/exp v0.0.0-20250408133849-7e4ce0ab07d0 h1:R84qjqJb5nVJMxqWYb3np9L5ZsaDtB+a39EqjV0JSUM=
 golang.org/x/exp v0.0.0-20250408133849-7e4ce0ab07d0/go.mod h1:S9Xr4PYopiDyqSyp5NjCrhFrqg6A5zA2E/iPHPhqnS8=
-golang.org/x/image v0.28.0 h1:gdem5JW1OLS4FbkWgLO+7ZeFzYtL3xClb97GaUzYMFE=
-golang.org/x/image v0.28.0/go.mod h1:GUJYXtnGKEUgggyzh+Vxt+AviiCcyiwpsl8iQ8MvwGY=
+golang.org/x/image v0.29.0 h1:HcdsyR4Gsuys/Axh0rDEmlBmB68rW1U9BUdB3UVHsas=
+golang.org/x/image v0.29.0/go.mod h1:RVJROnf3SLK8d26OW91j4FrIHGbsJ8QnbEocVTOWQDA=
 golang.org/x/mod v0.6.0-dev.0.20220419223038-86c51ed26bb4/go.mod h1:jJ57K6gSWd91VN4djpZkiMVwK6gcyfeH4XE8wZrZaV4=
 golang.org/x/mod v0.8.0/go.mod h1:iBbtSCu2XBx23ZKBPSOrRkjjQPZFPuis4dIYUhu/chs=
 golang.org/x/mod v0.12.0/go.mod h1:iBbtSCu2XBx23ZKBPSOrRkjjQPZFPuis4dIYUhu/chs=
@@ -594,8 +599,8 @@ golang.org/x/net v0.10.0/go.mod h1:0qNGK6F8kojg2nk9dLZ2mShWaEBan6FAoqfSigmmuDg=
 golang.org/x/net v0.15.0/go.mod h1:idbUs1IY1+zTqbi8yxTbhexhEEk5ur9LInksu6HrEpk=
 golang.org/x/net v0.21.0/go.mod h1:bIjVDfnllIU7BJ2DNgfnXvpSvtn8VRwhlsaeUTyUS44=
 golang.org/x/net v0.25.0/go.mod h1:JkAGAh7GEvH74S6FOH42FLoXpXbE/aqXSrIQjXgsiwM=
-golang.org/x/net v0.41.0 h1:vBTly1HeNPEn3wtREYfy4GZ/NECgw2Cnl+nK6Nz3uvw=
-golang.org/x/net v0.41.0/go.mod h1:B/K4NNqkfmg07DQYrbwvSluqCJOOXwUjeb/5lOisjbA=
+golang.org/x/net v0.42.0 h1:jzkYrhi3YQWD6MLBJcsklgQsoAcw89EcZbJw8Z614hs=
+golang.org/x/net v0.42.0/go.mod h1:FF1RA5d3u7nAYA4z2TkclSCKh68eSXtiFwcWQpPXdt8=
 golang.org/x/oauth2 v0.30.0 h1:dnDm7JmhM45NNpd8FDDeLhK6FwqbOf4MLCM9zb1BOHI=
 golang.org/x/oauth2 v0.30.0/go.mod h1:B++QgG3ZKulg6sRPGD/mqlHQs5rB3Ml9erfeDY7xKlU=
 golang.org/x/sync v0.0.0-20190423024810-112230192c58/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=
```
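Each go.sum line records an `h1:` hash. Plain entries hash a module's full file tree, while `/go.mod` entries hash only its go.mod file. The sketch below shows how such a value can be computed. It assumes the "Hash1" scheme from `golang.org/x/mod/sumdb/dirhash`:

1. Hash each file with SHA-256.
2. Write one `"<hex digest>  <name>\n"` line per file, in sorted name order, into an outer SHA-256.
3. Base64-encode the outer digest and prefix it with `h1:`.

For the module-tree entries, the same scheme is assumed to run over every file in the module's zip. The helpers `hash1` and `goModHash` and the sample go.mod text are hypothetical. This is a stdlib-only illustration, not the go command's own code path.

```go
package main

import (
	"crypto/sha256"
	"encoding/base64"
	"fmt"
	"sort"
)

// hash1 sketches the dirhash "h1:" scheme: for each file, sorted by name,
// write "<hex sha256 of contents>  <name>\n" into an outer SHA-256, then
// base64-encode the outer digest and prefix it with "h1:".
func hash1(files map[string][]byte) string {
	names := make([]string, 0, len(files))
	for name := range files {
		names = append(names, name)
	}
	sort.Strings(names)

	outer := sha256.New()
	for _, name := range names {
		sum := sha256.Sum256(files[name])
		fmt.Fprintf(outer, "%x  %s\n", sum, name)
	}
	return "h1:" + base64.StdEncoding.EncodeToString(outer.Sum(nil))
}

// goModHash sketches a "<module> <version>/go.mod h1:..." entry: the go.mod
// contents are hashed as a single file named "go.mod".
func goModHash(goMod []byte) string {
	return hash1(map[string][]byte{"go.mod": goMod})
}

func main() {
	// Hypothetical go.mod contents, for illustration only.
	mod := []byte("module example.com/hello\n\ngo 1.22\n")
	fmt.Println(goModHash(mod))
}
```

The file name fed into the hash is just `go.mod`, not a versioned path. So two releases whose go.mod files are byte-identical should get the same `/go.mod` hash. That would explain why `spf13/pflag` v1.0.6 and v1.0.7, and `automemlimit` v0.7.3 and v0.7.4, share their `/go.mod` hashes in the go.sum diff.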

vendor/github.com/KimMachineGun/automemlimit/memlimit/cgroups.go (generated, vendored, 12 lines changed)

```diff
@@ -157,7 +157,7 @@ func getMemoryLimitV1(chs []cgroupHierarchy, mis []mountInfo) (uint64, error) {
 		return 0, err
 	}
 
-	// retrieve the memory limit from the memory.stats and memory.limit_in_bytes files.
+	// retrieve the memory limit from the memory.stat and memory.limit_in_bytes files.
 	return readMemoryLimitV1FromPath(cgroupPath)
 }
 
@@ -173,7 +173,7 @@ func getCgroupV1NoLimit() uint64 {
 func readMemoryLimitV1FromPath(cgroupPath string) (uint64, error) {
 	// read hierarchical_memory_limit and memory.limit_in_bytes files.
 	// but if hierarchical_memory_limit is not available, then use the max value as a fallback.
-	hml, err := readHierarchicalMemoryLimit(filepath.Join(cgroupPath, "memory.stats"))
+	hml, err := readHierarchicalMemoryLimit(filepath.Join(cgroupPath, "memory.stat"))
 	if err != nil && !errors.Is(err, os.ErrNotExist) {
 		return 0, fmt.Errorf("failed to read hierarchical_memory_limit: %w", err)
 	} else if hml == 0 {
@@ -202,8 +202,8 @@ func readMemoryLimitV1FromPath(cgroupPath string) (uint64, error) {
 	return limit, nil
 }
 
-// readHierarchicalMemoryLimit extracts hierarchical_memory_limit from memory.stats.
-// this function expects the path to be memory.stats file.
+// readHierarchicalMemoryLimit extracts hierarchical_memory_limit from memory.stat.
+// this function expects the path to be memory.stat file.
 func readHierarchicalMemoryLimit(path string) (uint64, error) {
 	file, err := os.Open(path)
 	if err != nil {
@@ -217,12 +217,12 @@ func readHierarchicalMemoryLimit(path string) (uint64, error) {
 
 		fields := strings.Split(line, " ")
 		if len(fields) < 2 {
-			return 0, fmt.Errorf("failed to parse memory.stats %q: not enough fields", line)
+			return 0, fmt.Errorf("failed to parse memory.stat %q: not enough fields", line)
 		}
 
 		if fields[0] == "hierarchical_memory_limit" {
 			if len(fields) > 2 {
-				return 0, fmt.Errorf("failed to parse memory.stats %q: too many fields for hierarchical_memory_limit", line)
+				return 0, fmt.Errorf("failed to parse memory.stat %q: too many fields for hierarchical_memory_limit", line)
 			}
 			return strconv.ParseUint(fields[1], 10, 64)
 		}
```

vendor/github.com/grafana/regexp/.gitignore (generated, vendored, new file, 15 lines added)

```diff
@@ -0,0 +1,15 @@
+# Binaries for programs and plugins
+*.exe
+*.exe~
+*.dll
+*.so
+*.dylib
+
+# Test binary, built with `go test -c`
+*.test
+
+# Output of the go coverage tool, specifically when used with LiteIDE
+*.out
+
+# Dependency directories (remove the comment below to include it)
+# vendor/
```

vendor/github.com/grafana/regexp/LICENSE (generated, vendored, new file, 27 lines added)

```diff
@@ -0,0 +1,27 @@
+Copyright (c) 2009 The Go Authors. All rights reserved.
+
+Redistribution and use in source and binary forms, with or without
+modification, are permitted provided that the following conditions are
+met:
+
+* Redistributions of source code must retain the above copyright
+notice, this list of conditions and the following disclaimer.
+* Redistributions in binary form must reproduce the above
+copyright notice, this list of conditions and the following disclaimer
+in the documentation and/or other materials provided with the
+distribution.
+* Neither the name of Google Inc. nor the names of its
+contributors may be used to endorse or promote products derived from
+this software without specific prior written permission.
+
+THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS
+"AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT
+LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR
+A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT
+OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL,
+SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT
+LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,
+DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY
+THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT
+(INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE
+OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.
```

12

vendor/github.com/grafana/regexp/README.md

generated

vendored

Normal file

12

vendor/github.com/grafana/regexp/README.md

generated

vendored

Normal file

|

|

@ -0,0 +1,12 @@

|

|||

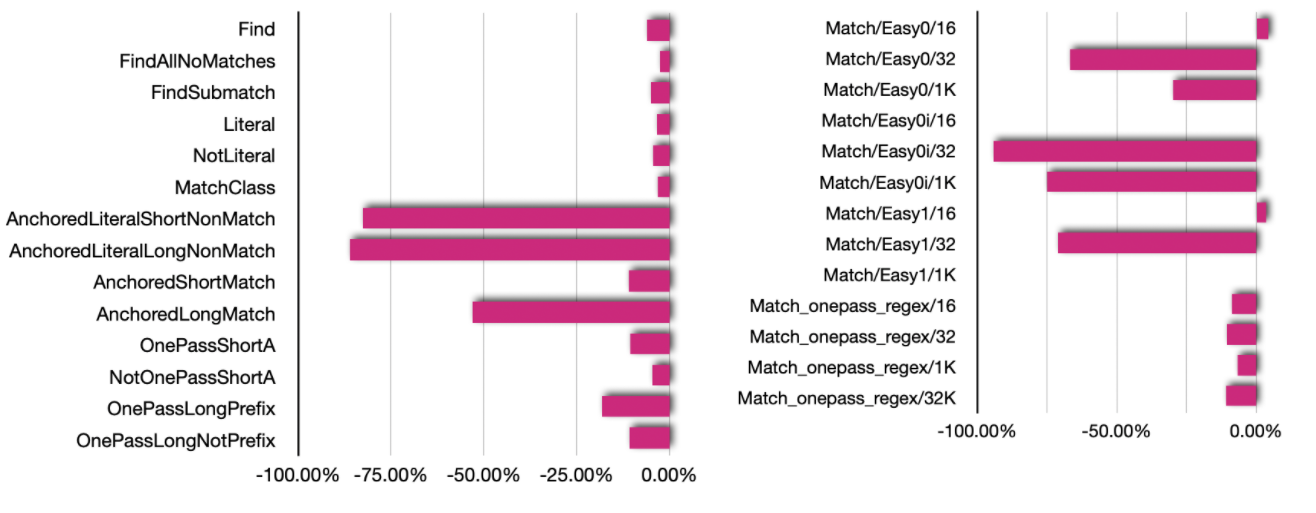

# Grafana Go regexp package

|

||||

This repo is a fork of the upstream Go `regexp` package, with some code optimisations to make it run faster.

|

||||

|

||||

All the optimisations have been submitted upstream, but not yet merged.

|

||||

|

||||

All semantics are the same, and the optimised code passes all tests from upstream.

|

||||

|

||||

The `main` branch is non-optimised: switch over to [`speedup`](https://github.com/grafana/regexp/tree/speedup) branch for the improved code.

|

||||

|

||||

## Benchmarks:

|

||||

|

||||

|

||||
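The README describes `github.com/grafana/regexp` as a fork of the standard `regexp` package with unchanged semantics. Code written against the standard API should therefore, in principle, behave the same with either import. The vendored files declare `package regexp`, so the assumed drop-in change is just the import path. The sketch below keeps the standard library import so it runs anywhere. The `splitModuleVersion` helper and its pattern are hypothetical; they are chosen to parse go.mod `require` lines like the ones in this commit, and the example extracts capture groups with `FindStringSubmatch`.

```go
package main

import (
	"fmt"
	"regexp" // assumed swap: "github.com/grafana/regexp" (same package name)
)

// requireLine matches a go.mod require entry such as
// "github.com/miekg/dns v1.1.67", capturing the module path and version.
var requireLine = regexp.MustCompile(`^\s*(\S+)\s+(v\S+)`)

// splitModuleVersion returns the module path and version from a require
// line, or ok == false if the line does not look like one.
func splitModuleVersion(line string) (module, version string, ok bool) {
	m := requireLine.FindStringSubmatch(line)
	if m == nil {
		return "", "", false
	}
	return m[1], m[2], true
}

func main() {
	mod, ver, ok := splitModuleVersion("\tgithub.com/grafana/regexp v0.0.0-20240518133315-a468a5bfb3bc // indirect")
	fmt.Println(mod, ver, ok)
}
```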

vendor/github.com/grafana/regexp/backtrack.go (generated, vendored, new file, 365 lines added)

```diff
@@ -0,0 +1,365 @@
+// Copyright 2015 The Go Authors. All rights reserved.
+// Use of this source code is governed by a BSD-style
+// license that can be found in the LICENSE file.
+
+// backtrack is a regular expression search with submatch
+// tracking for small regular expressions and texts. It allocates
+// a bit vector with (length of input) * (length of prog) bits,
+// to make sure it never explores the same (character position, instruction)
+// state multiple times. This limits the search to run in time linear in
+// the length of the test.
+//
+// backtrack is a fast replacement for the NFA code on small
+// regexps when onepass cannot be used.
+
+package regexp
+
+import (
+	"regexp/syntax"
+	"sync"
+)
+
+// A job is an entry on the backtracker's job stack. It holds
+// the instruction pc and the position in the input.
+type job struct {
+	pc  uint32
+	arg bool
+	pos int
+}
+
+const (
+	visitedBits        = 32
+	maxBacktrackProg   = 500        // len(prog.Inst) <= max
+	maxBacktrackVector = 256 * 1024 // bit vector size <= max (bits)
+)
+
+// bitState holds state for the backtracker.
+type bitState struct {
+	end      int
+	cap      []int
+	matchcap []int
+	jobs     []job
+	visited  []uint32
+
+	inputs inputs
+}
+
+var bitStatePool sync.Pool
+
+func newBitState() *bitState {
+	b, ok := bitStatePool.Get().(*bitState)
+	if !ok {
+		b = new(bitState)
+	}
+	return b
+}
+
+func freeBitState(b *bitState) {
+	b.inputs.clear()
+	bitStatePool.Put(b)
+}
```
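`newBitState` and `freeBitState` recycle backtracker state through a `sync.Pool`. `Get` returns a previously `Put` value when one is available. No `New` function is set, so an empty pool returns nil; the comma-ok type assertion catches that and falls back to `new(bitState)`. Below is a minimal sketch of the same pattern, with a hypothetical `scratch` type standing in for `bitState`. `freeBitState` calls `b.inputs.clear()`, presumably so pooled state does not keep the searched input reachable. The sketch mimics that by dropping its reference to caller data before returning the value to the pool, while keeping its buffer's capacity for reuse.

```go
package main

import (
	"fmt"
	"sync"
)

// scratch is a hypothetical stand-in for bitState: a reusable working
// buffer plus a reference to the caller's input.
type scratch struct {
	input []byte   // caller data; should not stay reachable from the pool
	words []uint32 // working buffer whose capacity is worth reusing
}

// scratchPool has no New func, so Get returns nil when the pool is empty.
var scratchPool sync.Pool

// getScratch mirrors newBitState: reuse a pooled value if there is one,
// otherwise allocate. The comma-ok assertion also covers a nil result.
func getScratch() *scratch {
	s, ok := scratchPool.Get().(*scratch)
	if !ok {
		s = new(scratch)
	}
	return s
}

// putScratch mirrors freeBitState: drop the caller's data, reset the
// buffer length (keeping its capacity), and hand the value back.
func putScratch(s *scratch) {
	s.input = nil
	s.words = s.words[:0]
	scratchPool.Put(s)
}

// byteSum is a toy workload that borrows a scratch value for one call.
func byteSum(data []byte) uint32 {
	s := getScratch()
	defer putScratch(s)

	s.input = data
	for _, c := range s.input {
		s.words = append(s.words, uint32(c))
	}
	var total uint32
	for _, w := range s.words {
		total += w
	}
	return total
}

func main() {
	fmt.Println(byteSum([]byte("ab"))) // 97 + 98
	fmt.Println(byteSum([]byte("ab"))) // same result, possibly on a reused value
}
```

A `sync.Pool` may discard pooled values at any garbage collection, so reuse is an optimisation rather than a guarantee. That is why the getter always keeps an allocation fallback, and why the reset in `putScratch` matters: a reused value must start out equivalent to a fresh one.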

|

||||

|

||||

// maxBitStateLen returns the maximum length of a string to search with

|

||||

// the backtracker using prog.

|

||||

func maxBitStateLen(prog *syntax.Prog) int {

|

||||

if !shouldBacktrack(prog) {

|

||||

return 0

|

||||

}

|

||||

return maxBacktrackVector / len(prog.Inst)

|

||||

}

|

||||

|

||||

// shouldBacktrack reports whether the program is too

|

||||

// long for the backtracker to run.

|

||||

func shouldBacktrack(prog *syntax.Prog) bool {

|

||||

return len(prog.Inst) <= maxBacktrackProg

|

||||

}

|

||||

|

||||

// reset resets the state of the backtracker.
// end is the end position in the input.
// ncap is the number of captures.
func (b *bitState) reset(prog *syntax.Prog, end int, ncap int) {
	b.end = end

	if cap(b.jobs) == 0 {
		b.jobs = make([]job, 0, 256)
	} else {
		b.jobs = b.jobs[:0]
	}

	visitedSize := (len(prog.Inst)*(end+1) + visitedBits - 1) / visitedBits
	if cap(b.visited) < visitedSize {
		b.visited = make([]uint32, visitedSize, maxBacktrackVector/visitedBits)
	} else {
		b.visited = b.visited[:visitedSize]
		clear(b.visited) // set to 0
	}

	if cap(b.cap) < ncap {
		b.cap = make([]int, ncap)
	} else {
		b.cap = b.cap[:ncap]
	}
	for i := range b.cap {
		b.cap[i] = -1
	}

	if cap(b.matchcap) < ncap {
		b.matchcap = make([]int, ncap)
	} else {
		b.matchcap = b.matchcap[:ncap]
	}
	for i := range b.matchcap {
		b.matchcap[i] = -1
	}
}

// shouldVisit reports whether the combination of (pc, pos) has not
// been visited yet.
func (b *bitState) shouldVisit(pc uint32, pos int) bool {
	n := uint(int(pc)*(b.end+1) + pos)
	if b.visited[n/visitedBits]&(1<<(n&(visitedBits-1))) != 0 {
		return false
	}
	b.visited[n/visitedBits] |= 1 << (n & (visitedBits - 1))
	return true
}
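reset sizes `visited` with a ceiling division, and shouldVisit addresses it row by row, with one row of end+1 bits per instruction. A minimal stand-alone sketch of that layout follows. `visitedSet` and `newVisitedSet` are hypothetical names, not part of the package.

```go
package main

import "fmt"

const visitedBits = 32

// visitedSet is a hypothetical stand-alone version of the bitState
// visited vector: one bit per (pc, pos) pair, packed into uint32 words.
type visitedSet struct {
	end  int // input length; positions run 0..end inclusive
	bits []uint32
}

func newVisitedSet(numInst, end int) *visitedSet {
	// Same ceiling division as bitState.reset: round the bit count
	// up to a whole number of 32-bit words.
	size := (numInst*(end+1) + visitedBits - 1) / visitedBits
	return &visitedSet{end: end, bits: make([]uint32, size)}
}

// shouldVisit marks (pc, pos) and reports whether it was unmarked before.
func (v *visitedSet) shouldVisit(pc uint32, pos int) bool {
	n := uint(int(pc)*(v.end+1) + pos) // row-major: end+1 bits per instruction
	word, mask := n/visitedBits, uint32(1)<<(n&(visitedBits-1))
	if v.bits[word]&mask != 0 {
		return false
	}
	v.bits[word] |= mask
	return true
}

func main() {
	v := newVisitedSet(3, 10)          // 3 instructions * 11 positions = 33 bits
	fmt.Println(len(v.bits))          // 2 words
	fmt.Println(v.shouldVisit(2, 10)) // true: first visit of the last pair
	fmt.Println(v.shouldVisit(2, 10)) // false: already visited
}
```

Because each (pc, pos) pair is explored at most once per call, the backtracker's work is bounded by the size of this vector. That bound is why maxBitStateLen caps the input length.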

// push pushes (pc, pos, arg) onto the job stack if it should be
// visited.
func (b *bitState) push(re *Regexp, pc uint32, pos int, arg bool) {
	// Only check shouldVisit when arg is false.
	// When arg is true, we are continuing a previous visit.
	if re.prog.Inst[pc].Op != syntax.InstFail && (arg || b.shouldVisit(pc, pos)) {
		b.jobs = append(b.jobs, job{pc: pc, arg: arg, pos: pos})
	}
}

// tryBacktrack runs a backtracking search starting at pos.
func (re *Regexp) tryBacktrack(b *bitState, i input, pc uint32, pos int) bool {
	longest := re.longest

	b.push(re, pc, pos, false)
	for len(b.jobs) > 0 {
		l := len(b.jobs) - 1
		// Pop job off the stack.
		pc := b.jobs[l].pc
		pos := b.jobs[l].pos
		arg := b.jobs[l].arg
		b.jobs = b.jobs[:l]

		// Optimization: rather than push and pop,
		// code that is going to push and continue
		// the loop simply updates pc, pos, and arg
		// and jumps to CheckAndLoop. We have to
		// do the shouldVisit check that push
		// would have, but we avoid the stack
		// manipulation.
		goto Skip
	CheckAndLoop:
		if !b.shouldVisit(pc, pos) {
			continue
		}
	Skip:

		inst := &re.prog.Inst[pc]

		switch inst.Op {
		default:
			panic("bad inst")
		case syntax.InstFail:
			panic("unexpected InstFail")
		case syntax.InstAlt:
			// Cannot just
			//   b.push(inst.Out, pos, false)
			//   b.push(inst.Arg, pos, false)
			// If during the processing of inst.Out, we encounter
			// inst.Arg via another path, we want to process it then.
			// Pushing it here will inhibit that. Instead, re-push
			// inst with arg==true as a reminder to push inst.Arg out
			// later.
			if arg {
				// Finished inst.Out; try inst.Arg.
				arg = false
				pc = inst.Arg
				goto CheckAndLoop
			} else {
				b.push(re, pc, pos, true)
				pc = inst.Out
				goto CheckAndLoop
			}

		case syntax.InstAltMatch:
			// One opcode consumes runes; the other leads to match.
			switch re.prog.Inst[inst.Out].Op {
			case syntax.InstRune, syntax.InstRune1, syntax.InstRuneAny, syntax.InstRuneAnyNotNL:
				// inst.Arg is the match.
				b.push(re, inst.Arg, pos, false)
				pc = inst.Arg
				pos = b.end
				goto CheckAndLoop
			}
			// inst.Out is the match - non-greedy
			b.push(re, inst.Out, b.end, false)
			pc = inst.Out
			goto CheckAndLoop

		case syntax.InstRune:
			r, width := i.step(pos)
			if !inst.MatchRune(r) {
				continue
			}
			pos += width
			pc = inst.Out
			goto CheckAndLoop

		case syntax.InstRune1:
			r, width := i.step(pos)
			if r != inst.Rune[0] {
				continue
			}
			pos += width
			pc = inst.Out
			goto CheckAndLoop

		case syntax.InstRuneAnyNotNL:
			r, width := i.step(pos)
			if r == '\n' || r == endOfText {
				continue
			}
			pos += width
			pc = inst.Out
			goto CheckAndLoop

		case syntax.InstRuneAny:
			r, width := i.step(pos)
			if r == endOfText {
				continue
			}
			pos += width
			pc = inst.Out
			goto CheckAndLoop

		case syntax.InstCapture:
			if arg {
				// Finished inst.Out; restore the old value.
				b.cap[inst.Arg] = pos
				continue
			} else {
				if inst.Arg < uint32(len(b.cap)) {
					// Capture pos to register, but save old value.
					b.push(re, pc, b.cap[inst.Arg], true) // come back when we're done.
					b.cap[inst.Arg] = pos
				}
				pc = inst.Out
				goto CheckAndLoop
			}

		case syntax.InstEmptyWidth:
			flag := i.context(pos)
			if !flag.match(syntax.EmptyOp(inst.Arg)) {
				continue
			}
			pc = inst.Out
			goto CheckAndLoop

		case syntax.InstNop:
			pc = inst.Out
			goto CheckAndLoop

		case syntax.InstMatch:
			// We found a match. If the caller doesn't care
			// where the match is, no point going further.
			if len(b.cap) == 0 {
				return true
			}

			// Record best match so far.
			// Only need to check end point, because this entire
			// call is only considering one start position.
			if len(b.cap) > 1 {
				b.cap[1] = pos
			}
			if old := b.matchcap[1]; old == -1 || (longest && pos > 0 && pos > old) {
				copy(b.matchcap, b.cap)
			}

			// If going for first match, we're done.
			if !longest {
				return true
			}

			// If we used the entire text, no longer match is possible.
			if pos == b.end {
				return true
			}

			// Otherwise, continue on in hope of a longer match.
			continue
		}
	}

	return longest && len(b.matchcap) > 1 && b.matchcap[1] >= 0
}

// backtrack runs a backtracking search of prog on the input starting at pos.
func (re *Regexp) backtrack(ib []byte, is string, pos int, ncap int, dstCap []int) []int {
	startCond := re.cond
	if startCond == ^syntax.EmptyOp(0) { // impossible
		return nil
	}
	if startCond&syntax.EmptyBeginText != 0 && pos != 0 {
		// Anchored match, past beginning of text.
		return nil
	}

	b := newBitState()
	i, end := b.inputs.init(nil, ib, is)
	b.reset(re.prog, end, ncap)

	// Anchored search must start at the beginning of the input.
	if startCond&syntax.EmptyBeginText != 0 {
		if len(b.cap) > 0 {
			b.cap[0] = pos
		}
		if !re.tryBacktrack(b, i, uint32(re.prog.Start), pos) {
			freeBitState(b)
			return nil
		}
	} else {

		// Unanchored search, starting from each possible text position.
		// Notice that we have to try the empty string at the end of
		// the text, so the loop condition is pos <= end, not pos < end.
		// This looks like it's quadratic in the size of the text,
		// but we are not clearing visited between calls to tryBacktrack,
		// so no work is duplicated and it ends up still being linear.
		width := -1
		for ; pos <= end && width != 0; pos += width {
			if len(re.prefix) > 0 {
				// Match requires literal prefix; fast search for it.
				advance := i.index(re, pos)
				if advance < 0 {
					freeBitState(b)
					return nil
				}
				pos += advance
			}

			if len(b.cap) > 0 {
				b.cap[0] = pos
			}
			if re.tryBacktrack(b, i, uint32(re.prog.Start), pos) {
				// Match must be leftmost; done.
				goto Match
			}
			_, width = i.step(pos)
		}
		freeBitState(b)
		return nil
	}

Match:
	dstCap = append(dstCap, b.matchcap...)
	freeBitState(b)
	return dstCap
}

554

vendor/github.com/grafana/regexp/exec.go

generated

vendored

Normal file

@@ -0,0 +1,554 @@

// Copyright 2011 The Go Authors. All rights reserved.
// Use of this source code is governed by a BSD-style
// license that can be found in the LICENSE file.

package regexp

import (
	"io"
	"regexp/syntax"
	"sync"
)

// A queue is a 'sparse array' holding pending threads of execution.
// See https://research.swtch.com/2008/03/using-uninitialized-memory-for-fun-and.html
type queue struct {
	sparse []uint32
	dense  []entry
}

// An entry is an entry on a queue.
// It holds both the instruction pc and the actual thread.
// Some queue entries are just placeholders so that the machine
// knows it has considered that pc. Such entries have t == nil.
type entry struct {
	pc uint32
	t  *thread
}

// A thread is the state of a single path through the machine:
// an instruction and a corresponding capture array.
// See https://swtch.com/~rsc/regexp/regexp2.html
type thread struct {
	inst *syntax.Inst
	cap  []int
}
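`queue` uses the sparse-set technique from the linked article. Both insertion and the membership test are O(1), and emptying the set only truncates `dense`. That is why `clear` and `step` can reset a queue with `dense[:0]`. A minimal sketch of the same structure follows; `sparseSet` is a hypothetical name. Go zeroes new slices, so unlike the C version in the article nothing here reads uninitialized memory. The benefit that carries over is the constant-time reset.

```go
package main

import "fmt"

// sparseSet is a hypothetical stand-alone version of the queue type:
// dense holds members in insertion order, and sparse maps a member to
// its index in dense. A member is present only if both arrays agree,
// so sparse never has to be cleared.
type sparseSet struct {
	sparse []uint32
	dense  []uint32
}

func newSparseSet(n int) *sparseSet {
	return &sparseSet{sparse: make([]uint32, n), dense: make([]uint32, 0, n)}
}

func (s *sparseSet) contains(pc uint32) bool {
	j := s.sparse[pc]
	return j < uint32(len(s.dense)) && s.dense[j] == pc
}

func (s *sparseSet) add(pc uint32) {
	if s.contains(pc) {
		return
	}
	s.sparse[pc] = uint32(len(s.dense))
	s.dense = append(s.dense, pc)
}

// reset empties the set in O(1) without touching sparse.
func (s *sparseSet) reset() { s.dense = s.dense[:0] }

func main() {
	s := newSparseSet(8)
	s.add(5)
	s.add(2)
	s.add(5)                                          // duplicate, ignored
	fmt.Println(s.dense, s.contains(2), s.contains(3)) // [5 2] true false
	s.reset()
	fmt.Println(s.contains(5)) // false: the stale sparse entry is ignored
}
```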

// A machine holds all the state during an NFA simulation for p.
type machine struct {
	re       *Regexp      // corresponding Regexp
	p        *syntax.Prog // compiled program
	q0, q1   queue        // two queues for runq, nextq
	pool     []*thread    // pool of available threads
	matched  bool         // whether a match was found
	matchcap []int        // capture information for the match

	inputs inputs
}

type inputs struct {
	// cached inputs, to avoid allocation
	bytes  inputBytes
	string inputString
	reader inputReader
}

func (i *inputs) newBytes(b []byte) input {
	i.bytes.str = b
	return &i.bytes
}

func (i *inputs) newString(s string) input {
	i.string.str = s
	return &i.string
}

func (i *inputs) newReader(r io.RuneReader) input {
	i.reader.r = r
	i.reader.atEOT = false
	i.reader.pos = 0
	return &i.reader
}

func (i *inputs) clear() {
	// Only one of these is in use, so only one needs clearing.
	// Avoid the expense of clearing the others (pointer write barrier).
	if i.bytes.str != nil {
		i.bytes.str = nil
	} else if i.reader.r != nil {
		i.reader.r = nil
	} else {
		i.string.str = ""
	}
}

func (i *inputs) init(r io.RuneReader, b []byte, s string) (input, int) {
	if r != nil {
		return i.newReader(r), 0
	}
	if b != nil {
		return i.newBytes(b), len(b)
	}
	return i.newString(s), len(s)
}

func (m *machine) init(ncap int) {
	for _, t := range m.pool {
		t.cap = t.cap[:ncap]
	}
	m.matchcap = m.matchcap[:ncap]
}

// alloc allocates a new thread with the given instruction.
// It uses the free pool if possible.
func (m *machine) alloc(i *syntax.Inst) *thread {
	var t *thread
	if n := len(m.pool); n > 0 {
		t = m.pool[n-1]
		m.pool = m.pool[:n-1]
	} else {
		t = new(thread)
		t.cap = make([]int, len(m.matchcap), cap(m.matchcap))
	}
	t.inst = i
	return t
}

// A lazyFlag is a lazily-evaluated syntax.EmptyOp,
// for checking zero-width flags like ^ $ \A \z \B \b.
// It records the pair of relevant runes and does not
// determine the implied flags until absolutely necessary
// (most of the time, that means never).
type lazyFlag uint64

func newLazyFlag(r1, r2 rune) lazyFlag {
	return lazyFlag(uint64(r1)<<32 | uint64(uint32(r2)))
}

func (f lazyFlag) match(op syntax.EmptyOp) bool {
	if op == 0 {
		return true
	}
	r1 := rune(f >> 32)
	if op&syntax.EmptyBeginLine != 0 {
		if r1 != '\n' && r1 >= 0 {
			return false
		}
		op &^= syntax.EmptyBeginLine
	}
	if op&syntax.EmptyBeginText != 0 {
		if r1 >= 0 {
			return false
		}
		op &^= syntax.EmptyBeginText
	}
	if op == 0 {
		return true
	}
	r2 := rune(f)
	if op&syntax.EmptyEndLine != 0 {
		if r2 != '\n' && r2 >= 0 {
			return false
		}
		op &^= syntax.EmptyEndLine
	}
	if op&syntax.EmptyEndText != 0 {
		if r2 >= 0 {
			return false
		}
		op &^= syntax.EmptyEndText
	}
	if op == 0 {
		return true
	}
	if syntax.IsWordChar(r1) != syntax.IsWordChar(r2) {
		op &^= syntax.EmptyWordBoundary
	} else {
		op &^= syntax.EmptyNoWordBoundary
	}
	return op == 0
}
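`lazyFlag` packs the rune before a position and the rune after it into one uint64. It defers the word-character test until an op actually needs it. The sketch below isolates the packing and the `\b` check. `pack`, `unpack`, and `atWordBoundary` are hypothetical helpers. Here -1 marks "no rune" (beginning or end of text), as endOfText does in the package; the `r1 >= 0` tests above rely on that.

```go
package main

import (
	"fmt"
	"regexp/syntax"
)

// pack mirrors newLazyFlag: the rune before the position goes in the
// high 32 bits, the rune after it in the low 32 bits.
func pack(before, after rune) uint64 {
	return uint64(before)<<32 | uint64(uint32(after))
}

// unpack mirrors the r1/r2 extraction in lazyFlag.match; the integer
// conversions truncate back to 32 bits, so -1 survives the round trip.
func unpack(f uint64) (before, after rune) {
	return rune(f >> 32), rune(f)
}

// atWordBoundary evaluates \b only on demand, as lazyFlag does for
// syntax.EmptyWordBoundary.
func atWordBoundary(f uint64) bool {
	r1, r2 := unpack(f)
	return syntax.IsWordChar(r1) != syntax.IsWordChar(r2)
}

func main() {
	f := pack(-1, 'a') // beginning of text, followed by 'a'
	r1, r2 := unpack(f)
	fmt.Printf("%d %c\n", r1, r2)               // -1 a
	fmt.Println(atWordBoundary(f))              // true
	fmt.Println(atWordBoundary(pack('a', 'b'))) // false
}
```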

// match runs the machine over the input starting at pos.
// It reports whether a match was found.
// If so, m.matchcap holds the submatch information.
func (m *machine) match(i input, pos int) bool {
	startCond := m.re.cond
	if startCond == ^syntax.EmptyOp(0) { // impossible
		return false
	}
	m.matched = false
	for i := range m.matchcap {
		m.matchcap[i] = -1
	}
	runq, nextq := &m.q0, &m.q1
	r, r1 := endOfText, endOfText
	width, width1 := 0, 0
	r, width = i.step(pos)
	if r != endOfText {
		r1, width1 = i.step(pos + width)
	}
	var flag lazyFlag
	if pos == 0 {
		flag = newLazyFlag(-1, r)
	} else {
		flag = i.context(pos)
	}
	for {
		if len(runq.dense) == 0 {
			if startCond&syntax.EmptyBeginText != 0 && pos != 0 {
				// Anchored match, past beginning of text.
				break
			}
			if m.matched {
				// Have match; finished exploring alternatives.
				break
			}
			if len(m.re.prefix) > 0 && r1 != m.re.prefixRune && i.canCheckPrefix() {
				// Match requires literal prefix; fast search for it.
				advance := i.index(m.re, pos)
				if advance < 0 {
					break
				}
				pos += advance
				r, width = i.step(pos)
				r1, width1 = i.step(pos + width)
			}
		}
		if !m.matched {
			if len(m.matchcap) > 0 {
				m.matchcap[0] = pos
			}
			m.add(runq, uint32(m.p.Start), pos, m.matchcap, &flag, nil)
		}
		flag = newLazyFlag(r, r1)
		m.step(runq, nextq, pos, pos+width, r, &flag)
		if width == 0 {
			break
		}
		if len(m.matchcap) == 0 && m.matched {
			// Found a match and not paying attention
			// to where it is, so any match will do.
			break
		}
		pos += width
		r, width = r1, width1
		if r != endOfText {
			r1, width1 = i.step(pos + width)
		}
		runq, nextq = nextq, runq
	}
	m.clear(nextq)
	return m.matched
}

// clear frees all threads on the thread queue.
func (m *machine) clear(q *queue) {
	for _, d := range q.dense {
		if d.t != nil {
			m.pool = append(m.pool, d.t)
		}
	}
	q.dense = q.dense[:0]
}

// step executes one step of the machine, running each of the threads
// on runq and appending new threads to nextq.
// The step processes the rune c (which may be endOfText),
// which starts at position pos and ends at nextPos.
// nextCond gives the setting for the empty-width flags after c.
func (m *machine) step(runq, nextq *queue, pos, nextPos int, c rune, nextCond *lazyFlag) {
	longest := m.re.longest
	for j := 0; j < len(runq.dense); j++ {
		d := &runq.dense[j]
		t := d.t
		if t == nil {
			continue
		}
		if longest && m.matched && len(t.cap) > 0 && m.matchcap[0] < t.cap[0] {
			m.pool = append(m.pool, t)
			continue
		}
		i := t.inst
		add := false
		switch i.Op {
		default:
			panic("bad inst")

		case syntax.InstMatch:
			if len(t.cap) > 0 && (!longest || !m.matched || m.matchcap[1] < pos) {
				t.cap[1] = pos
				copy(m.matchcap, t.cap)
			}
			if !longest {
				// First-match mode: cut off all lower-priority threads.
				for _, d := range runq.dense[j+1:] {
					if d.t != nil {
						m.pool = append(m.pool, d.t)
					}
				}
				runq.dense = runq.dense[:0]
			}
			m.matched = true

		case syntax.InstRune:
			add = i.MatchRune(c)
		case syntax.InstRune1:
			add = c == i.Rune[0]
		case syntax.InstRuneAny:
			add = true
		case syntax.InstRuneAnyNotNL:
			add = c != '\n'
		}
		if add {
			t = m.add(nextq, i.Out, nextPos, t.cap, nextCond, t)
		}
		if t != nil {
			m.pool = append(m.pool, t)
		}
	}
	runq.dense = runq.dense[:0]
}

// add adds an entry to q for pc, unless q already has such an entry.
// It also recursively adds an entry for all instructions reachable from pc by following
// empty-width conditions satisfied by cond. pos gives the current position
// in the input.
func (m *machine) add(q *queue, pc uint32, pos int, cap []int, cond *lazyFlag, t *thread) *thread {
Again:
	if pc == 0 {
		return t
	}
	if j := q.sparse[pc]; j < uint32(len(q.dense)) && q.dense[j].pc == pc {
		return t
	}

	j := len(q.dense)
	q.dense = q.dense[:j+1]
	d := &q.dense[j]
	d.t = nil
	d.pc = pc
	q.sparse[pc] = uint32(j)

	i := &m.p.Inst[pc]
	switch i.Op {
	default:
		panic("unhandled")
	case syntax.InstFail:
		// nothing
	case syntax.InstAlt, syntax.InstAltMatch:
		t = m.add(q, i.Out, pos, cap, cond, t)
		pc = i.Arg
		goto Again
	case syntax.InstEmptyWidth:
		if cond.match(syntax.EmptyOp(i.Arg)) {
			pc = i.Out
			goto Again
		}
	case syntax.InstNop:
		pc = i.Out
		goto Again
	case syntax.InstCapture:
		if int(i.Arg) < len(cap) {
			opos := cap[i.Arg]
			cap[i.Arg] = pos
			m.add(q, i.Out, pos, cap, cond, nil)
			cap[i.Arg] = opos
		} else {
			pc = i.Out
			goto Again
		}
	case syntax.InstMatch, syntax.InstRune, syntax.InstRune1, syntax.InstRuneAny, syntax.InstRuneAnyNotNL:
		if t == nil {
			t = m.alloc(i)
		} else {
			t.inst = i
		}
		if len(cap) > 0 && &t.cap[0] != &cap[0] {
			copy(t.cap, cap)
		}
		d.t = t
		t = nil
	}
	return t
}
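`step` and `add` together form a Pike-style NFA simulation. `add` follows empty-width edges eagerly and records each pc at most once per position. `step` then advances every live thread over the same rune in lockstep. The sketch below reproduces that structure for a hypothetical three-opcode instruction set: no captures, no empty-width assertions, and anchored at position 0. It illustrates the control flow only; the instruction format is not the package's.

```go
package main

import "fmt"

// A hypothetical three-opcode instruction set, far smaller than syntax.Inst.
type opcode int

const (
	opChar  opcode = iota // consume rune r, continue at x
	opSplit               // try x first, then y
	opMatch               // accept
)

type inst struct {
	op   opcode
	r    rune
	x, y int
}

// addThread mirrors machine.add: it follows Split edges eagerly and adds
// each pc at most once per step, so the list never exceeds len(prog).
func addThread(prog []inst, list []int, seen []bool, pc int) []int {
	if seen[pc] {
		return list
	}
	seen[pc] = true
	if prog[pc].op == opSplit {
		list = addThread(prog, list, seen, prog[pc].x)
		return addThread(prog, list, seen, prog[pc].y)
	}
	return append(list, pc)
}

// matchPrefix mirrors machine.step: every live thread sees the same rune
// and survivors seed the next list. It reports whether prog matches some
// prefix of input.
func matchPrefix(prog []inst, input string) bool {
	clist := addThread(prog, nil, make([]bool, len(prog)), 0)
	for _, c := range input {
		seen := make([]bool, len(prog))
		var nlist []int
		for _, pc := range clist {
			switch in := prog[pc]; in.op {
			case opMatch:
				return true
			case opChar:
				if in.r == c {
					nlist = addThread(prog, nlist, seen, in.x)
				}
			}
		}
		clist = nlist
	}
	for _, pc := range clist {
		if prog[pc].op == opMatch {
			return true
		}
	}
	return false
}

func main() {
	// a+b:  0 Char a -> 1;  1 Split(0, 2);  2 Char b -> 3;  3 Match
	prog := []inst{
		{op: opChar, r: 'a', x: 1},
		{op: opSplit, x: 0, y: 2},
		{op: opChar, r: 'b', x: 3},
		{op: opMatch},
	}
	for _, s := range []string{"aab", "b", "aaa"} {
		fmt.Println(s, matchPrefix(prog, s)) // aab true, b false, aaa false
	}
}
```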

type onePassMachine struct {
	inputs   inputs
	matchcap []int
}

var onePassPool sync.Pool

func newOnePassMachine() *onePassMachine {
	m, ok := onePassPool.Get().(*onePassMachine)
	if !ok {
		m = new(onePassMachine)
	}
	return m
}

func freeOnePassMachine(m *onePassMachine) {
	m.inputs.clear()
	onePassPool.Put(m)
}
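newOnePassMachine/freeOnePassMachine and newBitState/freeBitState follow a common `sync.Pool` pattern. First, `Get` returns nil on an empty pool with no `New` function, so the comma-ok type assertion doubles as the "allocate a fresh one" check. Second, references to the caller's input are dropped before `Put`, so the pool does not keep that data reachable. A minimal sketch of the pattern with a hypothetical `scratch` type:

```go
package main

import (
	"fmt"
	"sync"
)

// scratch is a hypothetical stand-in for onePassMachine or bitState:
// per-match buffers that are worth reusing.
type scratch struct {
	caps  []int
	input string // stands in for the cached inputs
}

var scratchPool sync.Pool

// getScratch mirrors newOnePassMachine plus the matchcap setup in doOnePass.
func getScratch(ncap int) *scratch {
	s, ok := scratchPool.Get().(*scratch) // ok is false when the pool is empty
	if !ok {
		s = new(scratch)
	}
	if cap(s.caps) < ncap {
		s.caps = make([]int, ncap)
	} else {
		s.caps = s.caps[:ncap]
	}
	for i := range s.caps {
		s.caps[i] = -1 // -1 means "no submatch recorded"
	}
	return s
}

// putScratch mirrors freeOnePassMachine: drop the reference to the
// caller's input before returning the buffers to the pool.
func putScratch(s *scratch) {
	s.input = ""
	scratchPool.Put(s)
}

func main() {
	s := getScratch(4)
	s.input = "hello"
	fmt.Println(s.caps) // [-1 -1 -1 -1]
	putScratch(s)
}
```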

// doOnePass implements re.doExecute using the one-pass execution engine.
func (re *Regexp) doOnePass(ir io.RuneReader, ib []byte, is string, pos, ncap int, dstCap []int) []int {
	startCond := re.cond
	if startCond == ^syntax.EmptyOp(0) { // impossible
		return nil
	}

	m := newOnePassMachine()
	if cap(m.matchcap) < ncap {
		m.matchcap = make([]int, ncap)
	} else {
		m.matchcap = m.matchcap[:ncap]
	}

	matched := false
	for i := range m.matchcap {
		m.matchcap[i] = -1
	}

	i, _ := m.inputs.init(ir, ib, is)

	r, r1 := endOfText, endOfText
	width, width1 := 0, 0
	r, width = i.step(pos)
	if r != endOfText {
		r1, width1 = i.step(pos + width)
	}
	var flag lazyFlag
	if pos == 0 {
		flag = newLazyFlag(-1, r)
	} else {
		flag = i.context(pos)
	}
	pc := re.onepass.Start
	inst := &re.onepass.Inst[pc]
	// If there is a simple literal prefix, skip over it.
	if pos == 0 && flag.match(syntax.EmptyOp(inst.Arg)) &&
		len(re.prefix) > 0 && i.canCheckPrefix() {
		// Match requires literal prefix; fast search for it.
		if !i.hasPrefix(re) {
			goto Return
		}
		pos += len(re.prefix)
		r, width = i.step(pos)
		r1, width1 = i.step(pos + width)
		flag = i.context(pos)
		pc = int(re.prefixEnd)
	}
	for {
		inst = &re.onepass.Inst[pc]
		pc = int(inst.Out)
		switch inst.Op {
		default:
			panic("bad inst")
		case syntax.InstMatch:
			matched = true
			if len(m.matchcap) > 0 {
				m.matchcap[0] = 0
				m.matchcap[1] = pos
			}
			goto Return
		case syntax.InstRune:
			if !inst.MatchRune(r) {
				goto Return
			}
		case syntax.InstRune1:
			if r != inst.Rune[0] {
				goto Return
			}
		case syntax.InstRuneAny:
			// Nothing
		case syntax.InstRuneAnyNotNL:
			if r == '\n' {
				goto Return
			}
		// peek at the input rune to see which branch of the Alt to take
		case syntax.InstAlt, syntax.InstAltMatch:
			pc = int(onePassNext(inst, r))
			continue
		case syntax.InstFail:
			goto Return
		case syntax.InstNop:
			continue
		case syntax.InstEmptyWidth:
			if !flag.match(syntax.EmptyOp(inst.Arg)) {
				goto Return
			}
			continue
		case syntax.InstCapture:
			if int(inst.Arg) < len(m.matchcap) {
				m.matchcap[inst.Arg] = pos
			}
			continue
		}
		if width == 0 {
			break
		}
		flag = newLazyFlag(r, r1)
		pos += width
		r, width = r1, width1
		if r != endOfText {
			r1, width1 = i.step(pos + width)
		}
	}

Return:
	if !matched {
		freeOnePassMachine(m)
		return nil
	}

	dstCap = append(dstCap, m.matchcap...)
	freeOnePassMachine(m)
	return dstCap
}

// doMatch reports whether the input (r, b, or s) matches the regexp.
func (re *Regexp) doMatch(r io.RuneReader, b []byte, s string) bool {
	return re.doExecute(r, b, s, 0, 0, nil) != nil
}

// doExecute finds the leftmost match in the input, appends the position
// of its subexpressions to dstCap and returns dstCap.
//
// nil is returned if no matches are found and non-nil if matches are found.
func (re *Regexp) doExecute(r io.RuneReader, b []byte, s string, pos int, ncap int, dstCap []int) []int {
	if dstCap == nil {
		// Make sure 'return dstCap' is non-nil.
		dstCap = arrayNoInts[:0:0]
	}

	if r == nil && len(b)+len(s) < re.minInputLen {
		return nil
	}

	if re.onepass != nil {
		return re.doOnePass(r, b, s, pos, ncap, dstCap)
	}
	if r == nil && len(b)+len(s) < re.maxBitStateLen {
		return re.backtrack(b, s, pos, ncap, dstCap)
	}

	m := re.get()
	i, _ := m.inputs.init(r, b, s)

	m.init(ncap)
	if !m.match(i, pos) {
		re.put(m)